
Choosing an AI open ecosystem to participate in

As part of our tech policy debate series, we focused on AI tooling over the past three months, with guest analyst Mariam Sharia raising key questions in open ecosystems, healthcare and banking/finance. Now, we share how we are thinking through the issues Mariam raised, and in the next few blogs will share practical tools as well as insights into our policy thinking. In Mariam's first blog for us, she discussed the challenges of identifying what AI providers mean when they say they offer an open ecosystem. As we recently drafted our AI governance model at Platformable, we wanted to share our thinking that was inspired by Mariam's article.

Why choose an AI open ecosystem before selecting AI tools

It is no longer suitable to simply choose a tech tool as part of your procurement or tooling decisions. The tools you choose must now play well with others, and this is particularly true with AI. AI needs to connect with your workflows and your datasets, make use of novel technologies from external providers, and so on. Beyond ensuring interoperability, your tools must also be able to understand a range of open standards and data model standards and, for example, make use of API specification files. So rather than choosing an AI tool to use in your internal experiments and proof of concept testing, we propose you first choose an open AI ecosystem to participate in.

The challenges of selecting an open ecosystem for AI

However, there are some challenges, as Mariam points out in our first tech policy debate article. At the time of publication, Mariam noted that vendors such as IBM were suggesting they have an "open ecosystem", but what they really meant was that they had an ecosystem of integration consultants and other suppliers available to support you in using IBM's closed AI ecosystem tooling. Since then, in an announcement made only this week, IBM has moved towards creating a more open ecosystem by open sourcing some AI tools and through their InstructLab offering via Red Hat. Mariam also pointed to other examples of what she refers to as "open washing", where AI providers promote themselves as open but use varying definitions of what they mean by that.

There are other challenges that need to be considered besides how some vendors are framing their degree of openness:

New vendor lock-in risks: For many organisations, the past ten or so years have been about reducing vendor lock-in risks, especially from cloud and API management services. Many organisations were stuck with vendors that charged high licence fees and prevented interoperability with services and tooling outside the walled garden of their core vendor. Over recent years, many organisations have sought to extricate themselves from these relationships. This is also observable in the wider API industry, where there is a growing recognition that organisations might be using multiple API gateways and cloud providers, and also need to align with various API protocols, standards and architecture patterns. As a result, more forward-thinking API solutions are ensuring they provide an interoperable platform that allows for this high degree of flexibility.

The same is needed with AI open ecosystems, although there are some signs that not all AI providers are focused on this. In an article on The Conversation by Tim O'Reilly, Ilan Strauss, Mariana Mazzucato and Rufus Rock, the authors argue for open ecosystems, especially at this stage of AI's novelty, as "we'll see more innovation if emerging AI tools are accessible to everyone, such that a dispersed ecosystem of new firms, start-ups and AI tools can arise."

Emerging monopoly risks simply aimed at selling you more compute: There is a growing concern that a monopoly network is emerging amongst some leading cloud providers and computer chip manufacturers. I pointed to this concern in December 2023, during my keynote at apidays, where I cautioned that there were two main advocates for AI:

- Investors who were keen to see others buy in so they get a return on their initial investment as value is inflated, and

- Cloud providers who have a market interest in AI, as it uses more compute power requiring more cloud services to be purchased.

Six months after I cautioned that this was a potential threat, key governments, including the UK's Competition and Markets Authority (CMA), have uncovered that this is indeed a significant risk. Speaking at a conference in Washington DC, the UK's CMA Chief Executive Officer Sarah Cardell warned:

The CMA identified three risks that could destroy the emergence of open AI ecosystems (the CMA refers to foundation models, which it defines as "large, machine learning models trained on vast amounts of data"):

- "Firms controlling critical inputs for developing foundation models may restrict access to shield themselves from competition

- "Powerful incumbents could exploit their positions in consumer or business facing markets to distort choice in foundation models services and restrict competition in deployment

- "Partnerships involving key players could exacerbate existing positions of market power through the value chain".

As Mariam notes at the end of her tech policy debate piece, noted author and AI thought leader Ted Chiang warned that the underlying goal of much of AI is to simply sell businesses more compute resources.

I have been thinking about this a lot lately as I have increasingly focused on using LinkedIn to connect with a potential audience to discuss our work on supporting open ecosystems, now that Twitter is completely dead and deeply questionable from an ethical point of view. One bit of advice that everyone with LinkedIn skills seems to know about is that to 'game the algorithm' it is preferable not to include external links in your posts, as LinkedIn favours content that keeps you on the platform. That is, LinkedIn will show your post to a smaller proportion of your followers if it includes external links [1].

LinkedIn is owned by Microsoft. Ed Zitron exposed a similar business strategy at Google, where search was made more complicated in order to encourage visitors to stay on search pages for longer and therefore see more ads, part of a technology trend known as the 'rot economy'. So a key business strategy of two of the biggest cloud providers is to keep you using their services even when you don't need to, or when you should be getting on with other actual work. How can we trust that they won't use the same strategy to increase the use of compute resources when offering us AI? (For any SaaS business, a key challenge in building a sustainable business is already the significant share of costs that goes to cloud services.)

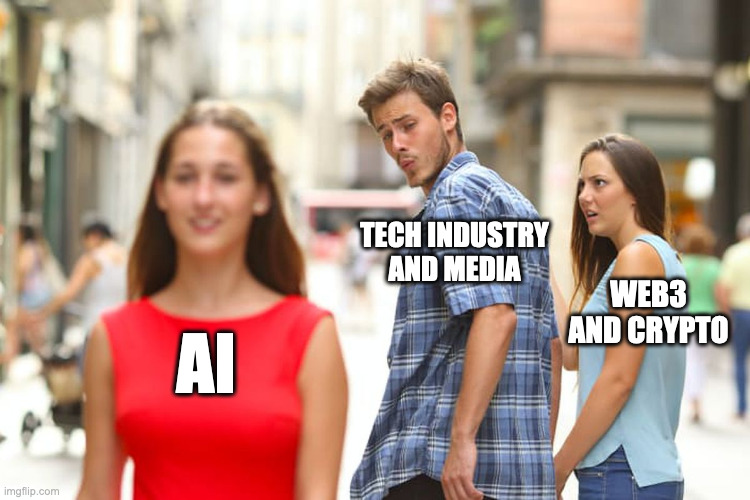

Buying into the hype: A final challenge for many when considering which open AI ecosystem to participate in is the confusion resulting from overhyped media reporting and the cult of tech personality. Tech media and consultancy groups have not learnt any lessons from previous cycles of tech hype. During the web3 and metaverse hype, most large consultancy companies came out with white papers and reports urging enterprises and businesses of all sizes to start experimenting and investing in building virtual worlds. There was a similar push for cryptocurrency, and in areas like urban planning we saw a push for autonomous vehicles, which would have returned city planning to a car-first mentality that we had only just swung away from. These all ended up as dead ends.

While media and consultancy firms sell their perspectives, businesses are stuck with over-investment in technological strategies that provide no real value, or that carry significant dangers. The same risk is emerging with AI, with little nuance on the balance that businesses need to strike and the priority areas to focus on.

But one aspect of the media hype we particularly caution against is the cult of personality that leads to treating someone as a tech leader messiah. This is a particular problem we are seeing with Sam Altman, following in the footsteps of Sam Bankman-Fried, Elon Musk, and the founders of WeWork, Uber, and Theranos (basically anyone who has been singled out on the cover of Forbes magazine in the past ten years).

At Platformable, I caution against adopting OpenAI in particular. (At the time of drafting much of this post over a week ago, this would have seemed a much more controversial statement to make!) There are multiple governance, due diligence and risk management concerns with OpenAI:

- They refuse to explain the data they have trained their technology on [2]

- They had a board governance issue last year that was particularly opaque and still has not been clarified, and in just the last few weeks, key governance and safety leaders within the organisation have resigned

- They changed their mission statement to suddenly move from a non-profit to for-profit model

- They quietly rewrote their terms of service to suddenly allow use of Generative AI for weapons-related purposes

- They created deepfake voice technologies which have virtually no non-criminal use cases

- They are potentially breaching copyright, are being sued on multiple fronts for doing so, and have not addressed this publicly; and

- Their CTO is unable to explain what video datasets they use and whether they have received consent for doing so.

On a personal front, from a cult-of-personality perspective, Sam Altman has led at least two highly exploitative initiatives that would not meet trade practice standards or commitments to prevent exploitation of workers (often termed anti-modern slavery policies in the EU and UK). First, he promised cryptocurrency to desperate Global South populations and arranged it in such a way that his business did not have to take responsibility for delivering the promised financial incentives, and then did not secure the biometric identity data sufficiently, so there is now a black market in the biometrics of those who provided their highly personal data. Second, OpenAI used African moderators in training its models, paying them extremely low wages to view violent and traumatic video and other content.

While I was preparing some of my arguments against both OpenAI and Sam Altman for this post, Ed Zitron published a comprehensive piece, which is worth reading. And just this week, news was released that shows more than dubious behaviour around using an actor's voice without consent for one of ChatGPT's latest features, while the company has offered no response to the flurry of resignations among its security and governance leadership.

From a risk management and due diligence point of view, looking at the management team, the compute costs of using OpenAI, and the true value of what can be created using these tools, any organisation would have to come to the conclusion that OpenAI is not an appropriate ecosystem to participate in, either with business data or at a production level.

It is disturbing that we at Platformable are one of the few businesses and analyst teams that will guide our clients and readers upfront about this, especially given the repeated cycles we have seen, perhaps most notably recently with both Sam Bankman-Fried and Elon Musk, where the cult of personality and media portrayal has encouraged oversized investments in technology that has not worked and is detrimental to business and society. Sam Altman, Elon Musk and Sam Bankman-Fried have all repeatedly been caught lying directly to investors, users and the general public. I have not seen the larger IT industry or API consultancy groups apply any of their analytical skills to this.

When starting with AI, we still believe that data and API governance come first: these need to mature sufficiently before any business can truly leverage AI opportunities. There are other foundational elements we think should be in place as well, including due diligence around use of copyright, exploitation of workers, environmental cost of compute resources, impacts on user experience, and so on. We believe there are potential use cases for AI, including perhaps generative AI, but at present many businesses and organisations are stuck between this overly hyped wind-up of AI and a fear that they will fall behind if they don't act now. We suggest steadier progress: let's look at your data systems, API infrastructure, customer needs, and greatest challenges for automation first, and from there work out how AI can support you.

Thanks to Mike Amundsen for a fascinating and fun lunch which inspired the meme created for this article, although I am sure someone else already thought of it!

Article references

[1] I still don't understand why following someone on social media doesn't automatically mean that I will see all their posts in my feed. I would think that ideally a feed would be all of the posts from everyone you follow, in chronological order? There is no actual feature or view of that, it seems.

[2] The U.S. National Telecommunications and Information Administration noted: "OpenAI provided a technical report for GPT-4 that – beyond noting that GPT-4 was a 'Transformer-style model pre-trained to predict the next token in a document, using both publicly available data (such as internet data) and data licensed from third-party providers' – declined to provide 'further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.'" AI System Disclosures, March 27, 2024.

Mark Boyd

DIRECTOR mark@platformable.com