
Product or Feature Idea: AI Blocker

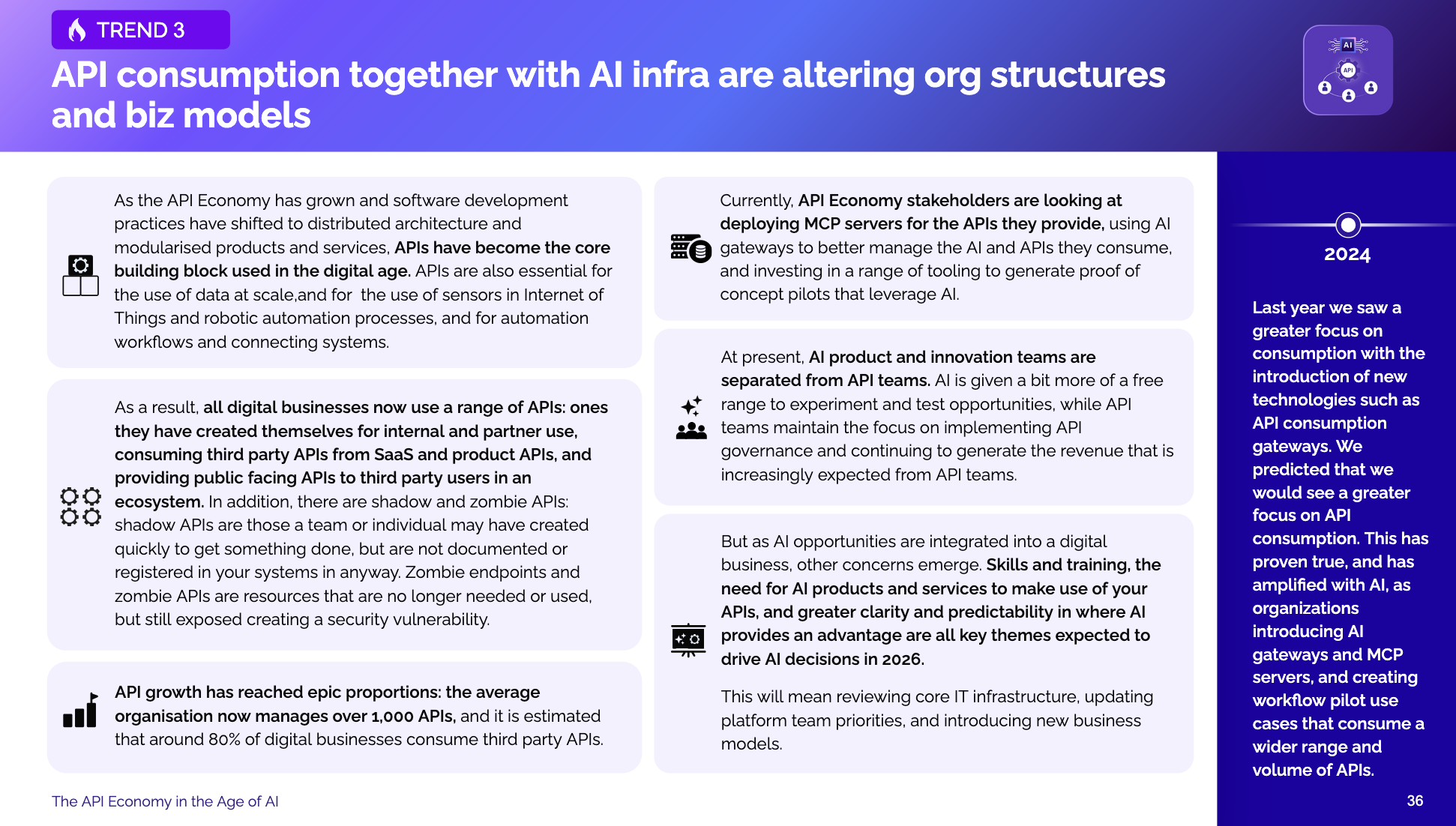

In our latest State of the Market report (produced with apidays and sponsored by Boomi), we identified five emerging trends that we expect to shape the API economy in the year ahead. Given the widespread focus on AI, the API Economy in the Age of AI report also considered how AI might play into each of these trends.

Last year, we identified the growth of API consumption as an area of increased focus (a prediction that proved accurate!). This year, we take that further, predicting that through 2026, API consumption patterns, together with AI infrastructure implementations, will alter organisational structures and business models.

From an organisational structure standpoint, we expect to see the early proof-of-concept and innovation AI teams folded into wider API teams.

From a business model point of view, we expect to see FinOps become more closely tied to API design practices, so that cost estimates of AI infrastructure requirements are used to determine whether new products and features should go ahead, based on their ROI in this new cost environment.

We also expect to see more usage-based pricing. Personally, I wouldn't be surprised if a complicated range of flagfalls and usage-based ladders is introduced first and then whittled down to something simpler over time (as has occurred previously with API pricing experimentation).[1]
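For readers less familiar with the terms: a flagfall is a fixed charge applied before any usage, and a usage ladder charges graduated rates as volume climbs through tiers. A minimal Python sketch, assuming a monthly bill and entirely hypothetical prices and tier boundaries (not taken from any real provider's price list):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    up_to: Optional[int]   # last call counted in this tier; None means unbounded
    price_per_call: float  # hypothetical price per call within this tier


def monthly_bill(calls: int, flagfall: float, ladder: list[Tier]) -> float:
    """Fixed flagfall plus graduated per-call charges across a usage ladder."""
    total = flagfall
    lower = 0
    for tier in ladder:
        upper = calls if tier.up_to is None else min(calls, tier.up_to)
        if upper > lower:
            # Charge only the calls that fall inside this tier's band.
            total += (upper - lower) * tier.price_per_call
        if tier.up_to is None or calls <= tier.up_to:
            break
        lower = tier.up_to
    return round(total, 2)


# Hypothetical ladder: first 10,000 calls, next 90,000 calls, then everything above.
LADDER = [Tier(10_000, 0.002), Tier(100_000, 0.001), Tier(None, 0.0005)]

print(monthly_bill(150_000, flagfall=20.0, ladder=LADDER))  # 155.0
```

Each tier boundary and flagfall is another number customers must reason about when forecasting their costs.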

In our predictions, we also stated that we expect to see a growing number of non-AI related product and feature offerings emerge. In this post, let's dig into that forecast a little.

If you are an API management vendor or one of the new startups offering AI gateways (which we track on both the API landscape and the beta-version AI landscape), we suggest packaging your features as an AI blocker product. After all, AI gateways aim to manage the flow of AI bots and API calls into and out of an organisation's systems, so with a little tweaking, a gateway could be marketed as a standalone AI blocker.

If you are an API tools provider, a SaaS or digital business, or a consultant, we think now is the time to ensure your product range includes products that specifically do not use AI, and it's worth marketing those non-AI products as actively as you market your AI features.

Drivers of demand for non-AI solutions

We see six key drivers for creating non-AI products:

- To avoid business risks

- To reinforce against security threats and vulnerabilities

- To strategise against financial volatility

- To meet consumer demand

- To leverage economic diversification

- To future-proof your organisation

To avoid business risks

Over the last year, we have seen most AI tool providers, and big tech companies with AI features shoehorned into their products, update their terms and conditions to allow access to users' data for AI training purposes. LinkedIn, for example, quietly gave itself the right to train on your data (you can opt out by selecting Settings -> Data privacy -> Data for Generative AI improvement). This was done without fanfare, and users were opted in automatically.

We have also seen a number of AI tool providers argue that copyright should not apply, so that more data is available for AI training. Multiple AI tools have been found using copyrighted work without purchase or licensing, and AI tools have been shown to work around robots.txt and other website terms of use that forbid scraping for AI, showing that these AI tool providers will not respect your business or organisational data if they can gain access to it.

This presents a number of business risks. Some businesses and organisations are cautious about using AI because they fear that their commercial-in-confidence data and information will be sucked up and used in AI training without their permission, or through an opt-out process that AI tools introduce without informing them.

This concern can be seen in our survey results on AI Governance, where almost 6 in 10 apidays respondents noted that their organisation had specific rules around what AI tools could be used, and 44% had policies defining what business data could be used in AI. This mirrors data shared by Laura Alix in BankDirector, where she notes 66% of banks surveyed are currently drafting acceptable use policies for AI.

If you do use AI tools from vendors that have used copyrighted materials, the business risk is that the tools embedded in your workflows will continue to face legal battles over their use of data. This may affect the availability of the AI in future, result in unexpected changes to the large language models (LLMs) you are using, or cause the AI tool provider to increase prices to cover legal liabilities, thereby impacting your production pipeline.

Recent studies have also shown that as few as 250 malicious documents in the training data can "poison" an LLM. LLMs used in your production pipelines may therefore be corrupted, affecting the delivery of your services in the future.

Given these risks, we believe some businesses will seek assurances that the SaaS, API tooling, and other digital services they use DO NOT make use of AI.

To reinforce against security threats and vulnerabilities

We believe there will be interest in non-AI tools from security- and compliance-conscious organisations, especially those worried about shadow AI: AI usage that has not been approved, or that falls outside the organisation's approved uses or approved tools. Shadow AI may be difficult to stop, of course, but with AI blockers in place, staff would at least need to make a concerted effort, for example by copying data from business systems onto their own devices or into a VPN-connected workspace, before applying AI tooling to those datasets.
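At the network level, one piece of an AI blocker could be an egress check that stops business systems from sending data to hosted AI APIs unless the tool has been approved. A minimal sketch, assuming outbound traffic passes through a gateway or proxy that can call a policy function; the hostnames are an illustrative, non-exhaustive list, and `is_allowed_egress` is a hypothetical name, not any vendor's API. It does nothing about data copied onto personal devices.

```python
from collections.abc import Set
from urllib.parse import urlsplit

# Illustrative, non-exhaustive hosts of public AI model APIs.
# A real deployment would maintain and update this list centrally.
BLOCKED_AI_HOSTS = frozenset({
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
})


def is_allowed_egress(url: str, approved_hosts: Set[str] = frozenset()) -> bool:
    """Return False for outbound calls to unapproved AI API hosts."""
    host = (urlsplit(url).hostname or "").lower()
    if host in approved_hosts:
        # Explicit exception for AI tools the organisation has approved.
        return True
    # Block listed hosts and any of their subdomains.
    return not any(host == h or host.endswith("." + h) for h in BLOCKED_AI_HOSTS)


print(is_allowed_egress("https://api.openai.com/v1/chat/completions"))  # False
print(is_allowed_egress("https://api.anthropic.com/v1/messages", {"api.anthropic.com"}))  # True
```

The `approved_hosts` exception is where an organisation's approved AI tools list would plug in, so the blocker enforces policy rather than banning AI outright.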

An IBM survey found that one in five organisations surveyed had experienced a breach because of “shadow AI” security issues, with shadow AI adding, on average, $670,000 to the cost of a breach.

In addition to the security risks, compliance risks also increase where the data being used in AI is sensitive. Aditya Patel at the Cloud Security Alliance noted that about 38% of employees surveyed had shared confidential data with AI platforms without approval. This could lead to fines for the business if that data becomes publicly accessible, or if an audit shows that the data has been shared outside defined governance policies.

Sidney Essendi, a strategist and specialist in African-built financial infrastructure, notes that the global security and compliance risks from shadow AI are being replicated at the local level. In Kenya, for example, while 80% of bank leaders point to potential benefits from generative AI, only 20% say they are prepared, creating "fertile ground for shadow AI".

To strategise against financial volatility

AI tool providers are still experimenting with their business models, and their pricing is subject to sudden fluctuations.

In response to sudden price changes by core AI providers, businesses offering specific tools built on these LLMs may need to change their pricing structures quickly, which in turn could affect your AI use cases. For example, when Anthropic changed its pricing model in July, a number of coding assistants, such as Cursor, had to reflect those changes in their own offerings. Swedish coding assistant Lovable later also changed its pricing model to something it calls "dynamic pricing", where "each message and response cost is based on the complexity of the message; so some messages and responses cost less than 1 credit, and some, if quite complex, cost more." In part, this appears to be a response to the higher costs it faces in using the LLMs of the big AI providers. All of these businesses also have rising cloud costs to contend with.

For any business seeking to build on top of AI tooling, whether by connecting AI tools directly into its value chain as external API web services that surface in, or power, its product features, or as a sub-process or input source within an automated internal workflow, it will be important to estimate how much this costs now, and at what threshold it stops being valuable if usage grows or the underlying pricing model changes. For example, a feature or workflow built using AI tooling may have a positive return on investment at present (in customer revenue or efficiencies), but if your usage extends beyond a certain number of hours per month, or if the AI tool provider changes its monthly pricing, that use case may suddenly not be worth the expense.

This means that design decisions need to incorporate more FinOps thinking upfront, and that the FinOps model for each feature or workflow using AI will need to be revisited whenever there is a pricing change or a change in usage levels. (With the last raft of pricing changes, for example, Anthropic argued that higher costs would only affect "power users", prompting what Futurism referred to as a user meltdown.)
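The threshold reasoning above can be made concrete with a small break-even model. A minimal sketch, assuming a hypothetical plan with a flat monthly fee that includes a quota of usage hours, an overage rate beyond that quota, and a fixed value (revenue or efficiency saving) per hour of AI-assisted work; all figures are invented for illustration. It finds the monthly usage above which the feature stops paying for itself, then repeats the calculation after a price change.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Plan:
    monthly_fee: float     # flat fee for the plan
    included_units: float  # usage (e.g. hours) covered by the fee
    overage_rate: float    # price per unit beyond the included quota


def net_value(plan: Plan, units: float, value_per_unit: float) -> float:
    """Value created by the feature minus what the AI tooling costs."""
    overage = max(0.0, units - plan.included_units)
    return value_per_unit * units - plan.monthly_fee - plan.overage_rate * overage


def usage_ceiling(plan: Plan, value_per_unit: float) -> float:
    """Highest monthly usage at which net value is still >= 0.

    Returns math.inf when overage costs no more than the value each unit
    creates (so there is no usage ceiling), and 0.0 when the fee cannot be
    recovered even by using the whole included quota.
    """
    if plan.overage_rate <= value_per_unit:
        return math.inf
    if value_per_unit * plan.included_units < plan.monthly_fee:
        return 0.0
    # Solve value*u - fee - rate*(u - included) = 0 for u beyond the quota.
    return (plan.overage_rate * plan.included_units - plan.monthly_fee) / (
        plan.overage_rate - value_per_unit
    )


before = Plan(monthly_fee=200.0, included_units=40.0, overage_rate=50.0)
after = replace(before, overage_rate=80.0)  # hypothetical provider price rise

print(usage_ceiling(before, value_per_unit=30.0))  # 90.0
print(usage_ceiling(after, value_per_unit=30.0))   # 60.0
```

In this invented example, raising the overage rate from $50 to $80 per hour cuts the viable usage ceiling from 90 to 60 hours a month, which is the kind of recalculation a FinOps model would need whenever pricing or usage changes.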

To meet consumer demand

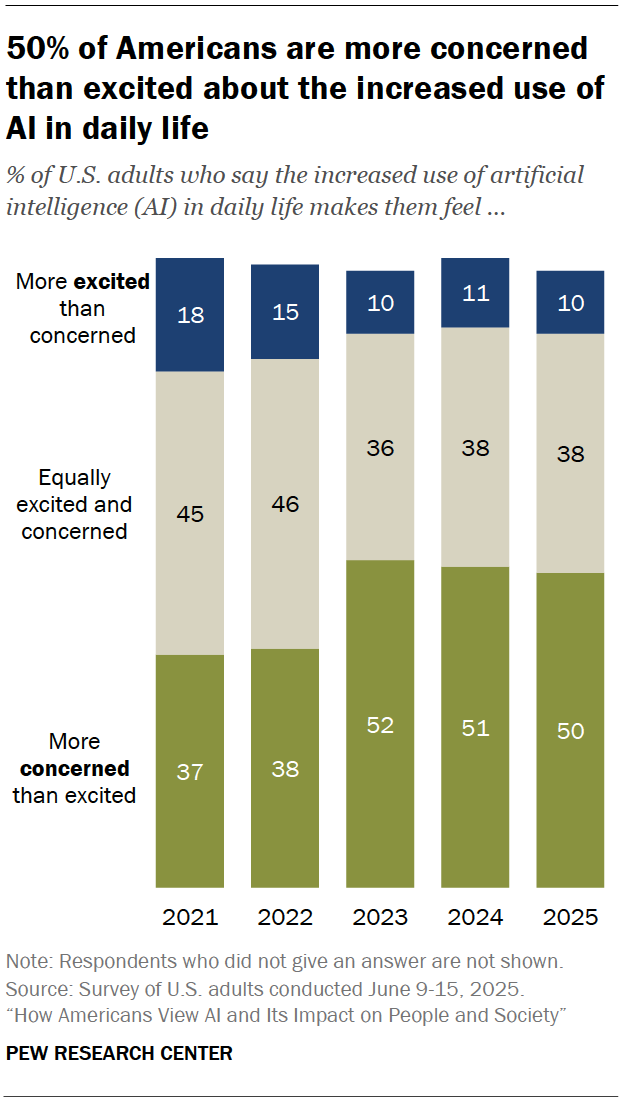

Research in June 2025 by the Pew Research Center found that 50% of Americans surveyed are more concerned than excited about the increased use of AI in daily life (mind you, 73% would also be willing to let AI assist them with day-to-day tasks "at least a little").

Backlash against businesses that focus too heavily on AI is also rising, although it is unclear whether this always has a consumer impact. Buy Now Pay Later provider Klarna moved to AI chatbots for customer service, citing at the time an increased ability to meet customer onboarding needs, and then reversed this decision eight months later, saying that quality had suffered too much. Duolingo, on the other hand, faced an initial backlash when it announced it was going "AI-first", but that backlash dwindled, and the company now reports surging profits from the strategy.

A recent US Consumer Reports guide explains how to turn off AI features in common big tech software, demonstrating growing interest in using these tools without AI.

To leverage economic diversification

If everyone is investing heavily in AI, there is a high risk that everyone is shovelling money into an unsustainable, non-viable technology (see the discussion below on future-proofing your organisation), and it can also lead to heavy commoditisation of AI tooling. The various LLMs and other AI offerings, such as coding assistants, are regularly tested against each other to determine which is the most efficient, most accurate, fastest, most performant at scale, and so on. But overall, the outputs for standard use cases may be quite uniform, which reduces the unique value your business can offer customers by integrating AI features that your competitors also offer. (And if it is a spreadsheet feature you are offering, then congratulations, you are offering your users an experience with around 57.2% accuracy. In a spreadsheet.)

Meanwhile, UK public services are trying to embed AI in everything, and Europe keeps proposing new budgets for AI approaches, with many current funding rounds supporting grant applications that sprinkle in the magic two letters. What is worrying is the limited diversification, with generative AI the main focus. A study on AI's value in agricultural solutions in Spain documents a number of case studies, but from my reading, none of them involve generative AI. And if the UK, US, and China are all focused on AI strategic goals, isn't there a case for hedging some bets by also investing in non-AI solutions?[2] At present, almost all of the US's GDP growth is being driven by investment in AI.

These kinds of geopolitical risks at the macroeconomic level are replicated at the local level. We believe that diversifying by also offering products that do not use AI could be a solid business strategy. We already see this in much of Cloudflare's approach to AI. Yes, it has multiple AI offerings, but it also provides non-AI solutions, such as AI Crawl Control and Web Bot Auth.

Others are taking similar steps: DuckDuckGo, for example, offers features to turn off AI in image search, and YouTube has changed its monetisation policies to restrict sharing ad revenue on AI-generated content.

To future-proof your organisation

At a time when it feels like AI is being forced into every product and service, some analysts, such as Anil Dash, note that developers and more experienced users see some value in AI, but that more work is needed to build the evidence base for potential use cases.

Even if AI does generate the expected value, there is growing concern that it won't do so quickly enough to provide a return on current investment.

If you value the critical thinking skills of your team or your customers, you may also be less willing to over-invest in AI solutions in your product offerings.

AI Blocking as a Business Product Strategy

As we mentioned, in many ways an AI gateway product IS a kind of AI blocker, or at least it can control what AI gets through to your systems, what it can access, and how much of your data it can devour. What we are suggesting here is to package up those features in a way that makes it clearer to potential users that this is an AI-blocking product.
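For inbound traffic, the simplest AI-blocking rule a gateway might apply is to refuse requests from crawlers that identify themselves as AI bots. A minimal sketch, assuming requests arrive with a mapping of headers; the tokens below are user-agent names used by AI crawlers, but the list is illustrative rather than exhaustive, and `gateway_decision` is a hypothetical function, not a vendor API. Because user-agent strings are trivially spoofed, a real product would layer this with bot verification (Web Bot Auth is one such approach), rate limiting, and behavioural detection.

```python
import re
from collections.abc import Mapping

# Illustrative, non-exhaustive user-agent tokens used by AI crawlers.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot", "Bytespider")
_AI_CRAWLER_RE = re.compile(
    "|".join(re.escape(token) for token in AI_CRAWLER_TOKENS), re.IGNORECASE
)


def gateway_decision(headers: Mapping[str, str]) -> tuple[int, str]:
    """Return an (HTTP status, reason) pair for an inbound request."""
    # HTTP header names are case-insensitive, so look the header up that way.
    user_agent = next(
        (value for name, value in headers.items() if name.lower() == "user-agent"), ""
    )
    if _AI_CRAWLER_RE.search(user_agent):
        return 403, "blocked: self-identified AI crawler"
    return 200, "allowed"


print(gateway_decision({"User-Agent": "Mozilla/5.0 (compatible; GPTBot/1.1)"}))
# (403, 'blocked: self-identified AI crawler')
```

Returning an explicit reason alongside the status makes it easy to surface blocked AI traffic in a dashboard, which is much of what an "AI blocker" product would be selling.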

More broadly, we believe that for businesses, multilateral organisations, research institutions, and governments, the AI policy and investment focus is severely unbalanced.

In the past, and in our recent reports, we have argued for a return to design-thinking best practices to understand real needs and opportunities. Too many AI experiments have started from a desire to use the tech rather than from a specific problem statement and user need, and even when a need has been identified, they have jumped straight to external consumer needs, rather than first looking at the challenges internal team members face and seeing whether those can be solved.

For example, when working with organisations on their API governance, a simple need is to be able to line up different public-facing APIs side by side and see what informal style and design decisions are being made and where duplicative data models exist. This helps create a style guide based on what developers are already doing, and quickly shows where common deficiencies can be addressed. We used Lovable to build the API Harmonizer for this purpose, and what's great is that while we used an AI tool to build the app, the app itself doesn't use any AI to line up the APIs and identify commonalities and differences. We are already using it in training materials and with clients.
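The API Harmonizer's internals are not covered here, so the following is not its implementation; it is a hypothetical sketch of the kind of deterministic, non-AI comparison described above. Assuming each API's OpenAPI 3 document has already been parsed into a Python dict, it lists schema names defined in more than one API (candidate duplicative data models) and counts the casing style of property names per API (one kind of informal style decision).

```python
import re
from collections import Counter, defaultdict


def casing(name: str) -> str:
    """Classify a property name's casing convention."""
    if re.fullmatch(r"[a-z][a-z0-9]*", name):
        return "lowercase"
    if re.fullmatch(r"[a-z][a-z0-9]*(?:_[a-z0-9]+)+", name):
        return "snake_case"
    if re.fullmatch(r"[a-z][a-z0-9]*(?:[A-Z][a-z0-9]*)+", name):
        return "camelCase"
    return "other"


def compare_apis(specs: dict[str, dict]) -> dict:
    """Compare parsed OpenAPI documents, keyed by API name."""
    owners: defaultdict[str, set[str]] = defaultdict(set)  # schema name -> APIs defining it
    styles: dict[str, Counter] = {}                         # API -> casing style counts
    for api, doc in specs.items():
        counts: Counter = Counter()
        for schema_name, schema in doc.get("components", {}).get("schemas", {}).items():
            owners[schema_name].add(api)
            for prop in schema.get("properties", {}):
                counts[casing(prop)] += 1
        styles[api] = counts
    return {
        "shared_schemas": {n: sorted(a) for n, a in owners.items() if len(a) > 1},
        "property_casing": {api: dict(c) for api, c in styles.items()},
    }


# Two hypothetical APIs that both define a Customer schema.
accounts = {"components": {"schemas": {"Customer": {"properties": {"customerId": {}, "firstName": {}}}}}}
payments = {"components": {"schemas": {"Customer": {"properties": {"customer_id": {}, "first_name": {}}}}}}
print(compare_apis({"accounts": accounts, "payments": payments}))
```

Here both APIs define a `Customer` schema, but one uses camelCase and the other snake_case property names: exactly the kind of inconsistency a style guide would then resolve.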

We have also worked with clients on, and continue to argue for, building more solid foundations: focus on the data and API governance infrastructure that is needed if AI is going to generate any value (also: document your workflows). And we recommend more multi-disciplinary AI and API teams, with expertise in compliance, FinOps, product management, and engineering working together from the start.

But now we are also saying that it is wise to have some product offerings that do not rely heavily (or at all) on AI, or on a future return from AI efficiency or effectiveness gains.

At Platformable, we have always come from a data-informed[3] and evidence-based position when developing policy and strategy. We understand that this then gets fed through politics, and decisions are made that might not align with the evidence. But AI represents an almost complete reversal of that process: politics has driven investment and strategy first, and now there is an almost desperate attempt to find where evidence of value can be demonstrated.

The recent release of Sora-2[4] is a good encapsulation of that approach to AI. It represents all that is troubling about AI implementations done badly: no business case or consumer need, no clear business model, use of copyrighted works without permission, lack of governance, lack of ethical consideration of the tech's use to create deepfakes and non-consensual porn, encouragement of dark patterns and addictive social media behaviours, and exorbitant use of energy and computing power without any prior forecasting or impact assessment. It still shocks me that anyone partnering with OpenAI can think that the way it has behaved with Sora-2 is not representative of how it approaches any other AI integration or project. We have been saying "culture eats strategy for breakfast" for over a decade now, but I guess we have also been saying that some people delude themselves: "it never works for anyone else... but it might work for us".

As the six drivers above continue to grow over the next 6-12 months, savvy digital businesses will move to offer both AI and non-AI products and features. Multilateral organisations, governments, and industry associations should seek to foster open digital ecosystems by diversifying digital adoption and providing investment and capacity-building resources that do not seek to embed AI into every strategy, tactic, or policy solution.

For more API Economy and AI trends, download the free State of the Market Report from apidays and Boomi.

Article references

1. Years ago, we worked with IFC on a process to help banks calculate their API pricing strategy. We then went on to build our own prototype at https://pricingtool.platformable.com/. We'd love to work with partners who are interested in updating this prototype to cover how APIs should be costed. Please reach out via our Calendly form or our contact page.

2. It is as if governments are not learning anything from the work of Maria Farrell and Robin Berjon, who discuss single points of failure and the concentration of technologies in this essential reading: https://www.noemamag.com/we-need-to-rewild-the-internet/

3. This includes qualitative data, focus group feedback, collections of anecdotal stories from informants, etc.

4. I'm not going to link to it. Also, an AI company focusing on creating a social media site and business model suggests it has no other long-term revenue-generating opportunities. I have no idea why this is a priority for an organisation that is partnering with governments, banks, and health providers around the world.

Mark Boyd

Director

mark@platformable.com